The Modern Data Platform Series: Part 2 - Apache Iceberg in Minutes

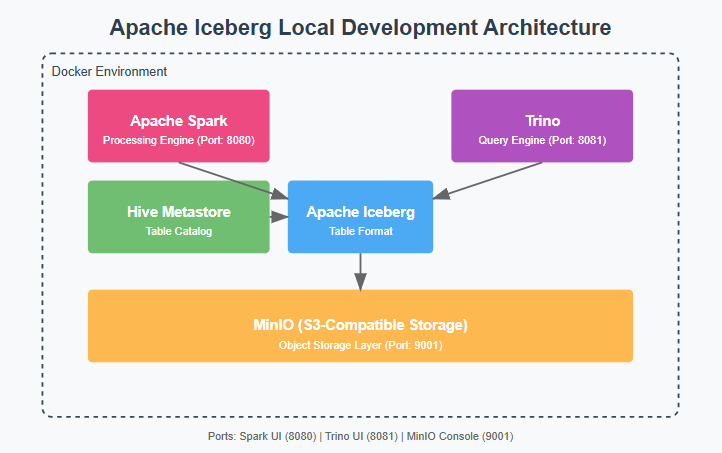

Spark + Iceberg + Trino + MinIO + Hive Metastore

This guide walks you through setting up a modern data lake environment on your local machine with Apache Iceberg. The stack combines Spark and Trino as query engines, Apache Iceberg as the table format, Hive Metastore as the catalog, and MinIO as the object storage backend. By the end of this article, you'll be able to run analytics on Iceberg tables with Spark, all from your local system.

This local setup gives developers and data engineers a sandbox for experimenting with modern data lake technologies without the complexity and cost of cloud infrastructure. Because everything runs on your laptop, you can quickly iterate on your data models, test different ingestion patterns, and validate query performance.

10-Minute Setup for a Production-Grade Data Lake

What makes this setup particularly valuable is that it mirrors a production-grade data lake architecture while being completely containerized and locally runnable. Within 10 minutes, you get:

A fully functional Iceberg-powered data lake

Multiple query engines (Spark and Trino)

S3-compatible storage

A production-grade metastore

All running through Docker containers

This means you can develop and test your data pipelines locally with the same technologies you'd use in production, ensuring a smooth transition when you're ready to deploy.

Component Deep Dive

Let's explore each component and its role in this architecture:

Hive Metastore as the Catalog

Serves as the central catalog for all Iceberg tables

Tracks the location of each table's current metadata file; the schemas, partition specs, and snapshot history themselves live in Iceberg metadata files in object storage

Enables consistent table access across different query engines
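To make the catalog's role concrete, here is a minimal sketch of the Spark properties that register an Iceberg catalog backed by Hive Metastore, with MinIO reached through the S3A connector. The property names are standard Iceberg and Hadoop S3A settings, but the catalog name, hostnames, ports, warehouse path, and credentials are assumptions for illustration and may differ from what the repository configures.

```python
"""Sketch: Spark settings for an Iceberg catalog backed by Hive Metastore and MinIO."""

# Assumed values: the catalog name, service hostnames, ports, and warehouse
# path below are placeholders, not taken from the repository.
CATALOG = "iceberg"
METASTORE_URI = "thrift://hive-metastore:9083"
MINIO_ENDPOINT = "http://minio:9000"
WAREHOUSE = "s3a://warehouse/"


def iceberg_spark_conf(access_key: str, secret_key: str) -> dict:
    """Return Spark properties that register a Hive-backed Iceberg catalog."""
    prefix = f"spark.sql.catalog.{CATALOG}"
    return {
        # Enables Iceberg-specific SQL such as ALTER TABLE ... RENAME COLUMN.
        "spark.sql.extensions": (
            "org.apache.iceberg.spark.extensions.IcebergSparkSessionExtensions"
        ),
        prefix: "org.apache.iceberg.spark.SparkCatalog",
        f"{prefix}.type": "hive",
        f"{prefix}.uri": METASTORE_URI,
        f"{prefix}.warehouse": WAREHOUSE,
        # S3A settings so Spark can read and write data files in MinIO.
        "spark.hadoop.fs.s3a.endpoint": MINIO_ENDPOINT,
        "spark.hadoop.fs.s3a.access.key": access_key,
        "spark.hadoop.fs.s3a.secret.key": secret_key,
        "spark.hadoop.fs.s3a.path.style.access": "true",
    }


def build_session(conf: dict):
    """Create a SparkSession from the properties.

    Requires pyspark plus the Iceberg runtime and hadoop-aws jars on the classpath.
    """
    from pyspark.sql import SparkSession  # lazy import: module loads without pyspark

    builder = SparkSession.builder.appName("iceberg-local")
    for key, value in conf.items():
        builder = builder.config(key, value)
    return builder.getOrCreate()
```

Because Trino points at the same metastore, a table created through this catalog in Spark should be visible to Trino without any copy or export step.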

Trino for Analytics

Runs interactive, distributed SQL queries on Iceberg tables

Supports complex analytical workloads
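As an illustration of querying through Trino, the sketch below submits SQL over Trino's HTTP client protocol using only the Python standard library: it posts the statement to `/v1/statement` and follows each `nextUri` link until the result is complete. The port comes from the service list in this guide; the user name is an arbitrary placeholder, and any table you query is whatever you have created in your own catalog.

```python
"""Sketch: run a SQL statement against Trino over its HTTP client protocol."""
import json
import urllib.request

TRINO_URL = "http://localhost:8081"  # Trino address listed in this guide


def build_statement_request(sql: str, user: str = "demo") -> urllib.request.Request:
    """Build the initial POST /v1/statement request that submits a query."""
    return urllib.request.Request(
        f"{TRINO_URL}/v1/statement",
        data=sql.encode("utf-8"),
        headers={"X-Trino-User": user},  # Trino requires a user on every request
        method="POST",
    )


def run_query(sql: str, user: str = "demo") -> list:
    """Submit the query and follow nextUri links until all rows are collected."""
    rows = []
    request = build_statement_request(sql, user)
    while request is not None:
        with urllib.request.urlopen(request, timeout=30) as response:
            page = json.load(response)
        if "error" in page:
            raise RuntimeError(page["error"].get("message", "query failed"))
        # Result rows arrive in pages; pages without rows omit the "data" key.
        rows.extend(page.get("data", []))
        next_uri = page.get("nextUri")
        request = (
            urllib.request.Request(next_uri, headers={"X-Trino-User": user})
            if next_uri
            else None
        )
    return rows
```

With the stack running, `run_query("SHOW CATALOGS")` is a reasonable first call for seeing which catalogs Trino has been configured with.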

Apache Spark for Data Processing

Handles efficient data ingestion into Iceberg format

Supports both batch and streaming workloads

Provides powerful data transformation capabilities

MinIO as S3-Compatible Storage

Exposes an S3-compatible API locally

Provides a consistent storage interface across environments

Includes a web console for easy data management

Enables testing of S3-specific features without cloud costs

Why Apache Iceberg?

Apache Iceberg is a high-performance table format designed for large analytic datasets. Unlike traditional table formats, Iceberg excels in:

Schema Evolution: Supports adding, dropping, or renaming columns without rewriting entire datasets.

Partition Flexibility: Hidden partitioning derives partition values from column transforms (for example, the day of a timestamp), so queries are pruned automatically without users filtering on separate partition columns.

ACID Transactions: Provides robust data consistency and reliability with support for snapshot isolation.

Query Engine Agnostic: Seamlessly integrates with Trino, Spark, Presto, Flink, and other processing engines.
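The features above map onto a handful of Spark SQL statements. The sketch below collects them in a list and runs them on an existing session; the table name `iceberg.demo.trips` and its columns are hypothetical, and the statements assume an Iceberg catalog named `iceberg` with the Iceberg SQL extensions enabled and a `demo` namespace already created.

```python
"""Sketch: Spark SQL statements that exercise Iceberg features."""

# Hypothetical table name; the catalog and namespace are assumptions.
TABLE = "iceberg.demo.trips"

STATEMENTS = [
    # Hidden partitioning: partition by a day value derived from pickup_ts.
    f"""CREATE TABLE IF NOT EXISTS {TABLE} (
            trip_id BIGINT,
            pickup_ts TIMESTAMP,
            fare DOUBLE
        ) USING iceberg
        PARTITIONED BY (days(pickup_ts))""",
    # Schema evolution: metadata-only changes, no data files are rewritten.
    f"ALTER TABLE {TABLE} ADD COLUMN tip DOUBLE",
    f"ALTER TABLE {TABLE} RENAME COLUMN fare TO fare_amount",
    f"ALTER TABLE {TABLE} DROP COLUMN tip",
    # Snapshots: every commit is recorded and can be inspected as a table.
    f"SELECT snapshot_id, committed_at, operation FROM {TABLE}.snapshots",
]


def run_all(spark) -> None:
    """Execute each statement on a SparkSession that has an Iceberg catalog."""
    for statement in STATEMENTS:
        spark.sql(statement).show(truncate=False)
```

A query such as `SELECT * FROM iceberg.demo.trips WHERE pickup_ts >= '2016-01-15'` would then be pruned to the matching daily partitions even though it never mentions a partition column.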

Why Use Spark with Iceberg?

Spark is a distributed processing engine that pairs perfectly with Iceberg for:

Efficient Query Execution: Leverages Iceberg’s partition pruning and metadata for faster reads.

Scalability: Handles massive datasets distributed across multiple nodes.

Flexibility: Runs batch and streaming workloads efficiently.

By combining Apache Iceberg and Spark, you can achieve a powerful and efficient data warehousing setup locally.

Quick Start with the GitHub Repository

This GitHub repository provides a pre-configured environment for experimenting with Apache Iceberg, Spark, and Trino. By following the README steps, you can:

Set up a modern data lakehouse environment in minutes using MinIO.

Explore Apache Iceberg’s features locally.

Leverage Spark for scalable processing and Trino for SQL querying.

Whether you're testing schema evolution, optimizing partitioning, or running analytics, this setup is a perfect starting point for your data engineering journey.

Step 1: Clone the Repository

# Clone the repository

$ git clone https://github.com/howdyhow9/iceberg_demo_1.git

$ cd iceberg_demo_1

Step 2: Follow the README Instructions

The repository README contains comprehensive instructions to:

Create Required Directories:

mkdir -p data/iceberg/osdp/spark-warehouse postgres-data

Start the Services:

docker-compose up -d

Access Service Interfaces:

Trino:

http://localhost:8081

Spark Master UI:

http://localhost:8080

MinIO Console:

http://localhost:9001

Run Spark Scripts:

Submit scripts for Iceberg processing as detailed in the README.
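Before submitting any scripts, it can help to confirm that the three service interfaces listed above are actually responding. The following is a small standard-library sketch that probes each URL; the helper names `is_up` and `report` are made up for this example, and a service that answers with an HTTP error status is still counted as reachable.

```python
"""Sketch: check that the local service interfaces respond."""
import urllib.error
import urllib.request

# URLs as listed in this guide.
SERVICES = {
    "Trino": "http://localhost:8081",
    "Spark Master UI": "http://localhost:8080",
    "MinIO Console": "http://localhost:9001",
}


def is_up(url: str, timeout: float = 3.0) -> bool:
    """Return True if the URL answers with any HTTP response."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        return True  # the server answered, even if with an error status
    except (urllib.error.URLError, OSError):
        return False  # connection refused, timeout, or DNS failure


def report() -> dict:
    """Probe every service and return a name -> reachable mapping."""
    return {name: is_up(url) for name, url in SERVICES.items()}
```

Calling `report()` a short while after `docker-compose up -d` gives a quick view of which containers are still starting.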

The source data file must be added to the MinIO bucket with the following specifications:

• Storage path: s3a://source-data/yellow_tripdata_2016-01.csv

• File size: 1.6 GB
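One way to place the file at that path is to upload it through MinIO's S3 API. The sketch below does this with `boto3`, a third-party library that must be installed separately; the endpoint and credentials are passed in by the caller because this guide only lists the console port, and MinIO's S3 API usually listens on a different port (commonly 9000, which is an assumption here). You can also upload the file through the MinIO web console instead.

```python
"""Sketch: upload the source CSV to MinIO with boto3."""
from urllib.parse import urlparse

SOURCE_URI = "s3a://source-data/yellow_tripdata_2016-01.csv"  # path required by this guide


def split_s3_uri(uri: str) -> tuple:
    """Split an s3a:// URI into (bucket, key)."""
    parsed = urlparse(uri)
    return parsed.netloc, parsed.path.lstrip("/")


def upload_source(local_path: str, endpoint: str, access_key: str, secret_key: str) -> None:
    """Create the bucket if it is missing and upload the local file to SOURCE_URI."""
    import boto3  # third-party; imported lazily so the helper above works without it
    from botocore.exceptions import ClientError

    bucket, key = split_s3_uri(SOURCE_URI)
    client = boto3.client(
        "s3",
        endpoint_url=endpoint,  # e.g. "http://localhost:9000" (assumed API port)
        aws_access_key_id=access_key,
        aws_secret_access_key=secret_key,
        region_name="us-east-1",
    )
    try:
        client.head_bucket(Bucket=bucket)
    except ClientError:
        client.create_bucket(Bucket=bucket)
    # upload_file switches to multipart upload for large files such as this one.
    client.upload_file(local_path, bucket, key)
```

Note that the `s3a://` scheme is how Spark addresses the object; on the S3 API side the same object is simply the key `yellow_tripdata_2016-01.csv` in the bucket `source-data`.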

Run the Spark SQL session script

docker-compose exec spark python3 /opt/spark/work-dir/scripts/spark_sql_session_iceberg.py

Submit a basic Spark job

docker-compose exec spark spark-submit /opt/spark/work-dir/scripts/basic_spark_iceberg.py
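For orientation, here is a rough sketch of what a basic CSV-to-Iceberg job could look like. It is not the repository's `basic_spark_iceberg.py`; the target table name is hypothetical, and the code assumes the session already has an Iceberg catalog named `iceberg` configured and that the source file has been uploaded to the path given earlier.

```python
"""Sketch of a CSV-to-Iceberg job; not the repository's actual script."""

SOURCE_PATH = "s3a://source-data/yellow_tripdata_2016-01.csv"  # from this guide
TARGET_TABLE = "iceberg.demo.yellow_trips"  # hypothetical catalog.namespace.table


def namespace_of(table: str) -> str:
    """Return the catalog.namespace part of a fully qualified table name."""
    return table.rsplit(".", 1)[0]


def load_trips(spark, source_path: str = SOURCE_PATH, target_table: str = TARGET_TABLE) -> int:
    """Read the CSV, write it as an Iceberg table, and return the row count."""
    spark.sql(f"CREATE NAMESPACE IF NOT EXISTS {namespace_of(target_table)}")
    trips = (
        spark.read
        .option("header", True)       # first line holds column names
        .option("inferSchema", True)  # costs an extra pass over the file
        .csv(source_path)
    )
    # createOrReplace() commits the new table contents as a single snapshot.
    trips.writeTo(target_table).using("iceberg").createOrReplace()
    return spark.table(target_table).count()


def main() -> None:
    from pyspark.sql import SparkSession  # provided by the Spark container

    # Assumes catalog and S3A settings come from the container's Spark config.
    spark = SparkSession.builder.appName("basic-iceberg-load").getOrCreate()
    print(f"Loaded {load_trips(spark)} rows into {TARGET_TABLE}")
    spark.stop()
```

To run something like this with `spark-submit`, save it as a script and call `main()` under the usual `if __name__ == "__main__":` guard.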